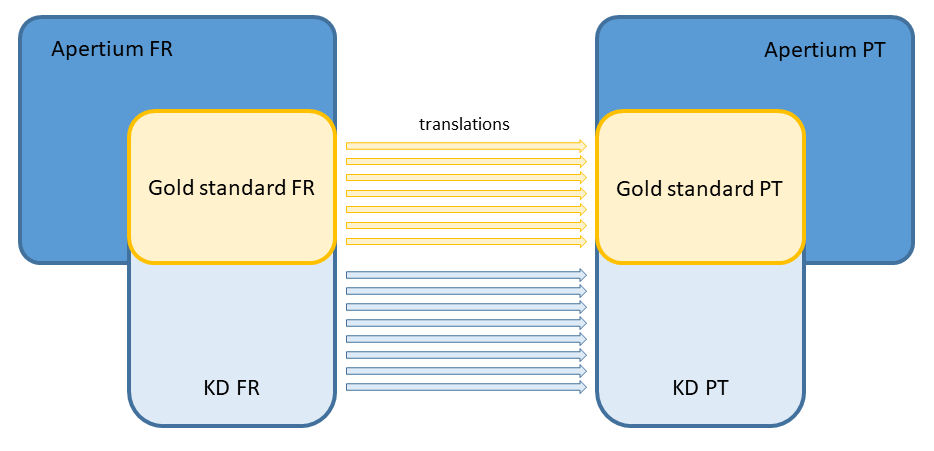

Gold standard

To

build the golden standard, we extracted translations from

manually compiled pairs of K Dictionaries (KD), particularly

its Global series. The coverage of KD is not the same as

Apertium. To allow comparisons, we took the subset of KD

that is covered by Apertium to build the gold standard,

i.e., those KD translations for which the source and target

terms are present in both Apertium RDF source and target

lexicons. The gold

standard remained hidden to participants. Graphically, for

the FR-PT pair:

Evaluation process

For each system results file, and per language pair, we will

1.Remove duplicated translations (some systems might produce

duplicated rows, i.e., identical source and target words, POS

and confidence degree).

2. Filter out translations for which the source entry or

the target entry are not present in the golden standard

(otherwise we cannot assess whether the translation is correct

or not). Let’s call systemGS the subset of

translations that pass this filter.

3. Translations with confidence degree under a given threshold

will be removed from systemGS. In principle, the used

threshold will be the one reported by participants as the

optimal one during the training/preparation phase.

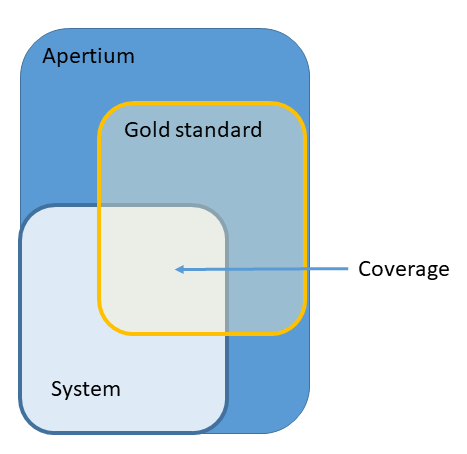

4. Compute the coverage of the system (i.e., how many

entries in the source language were translated?) with respect

to the gold standard. Graphically, for the source language:

5. Compute precision as P =(#correct translations

in systemGS) / |systemGS|

6. Compute recall as R =(#correct translations in

systemGS) / |GS|

where GS is the set of translations in the gold standard for a

given language pair

7. Compute F-measure as F=2*P*R/(P+R)

NOTE: The precision/recall metrics calculated after applying the filtering process explained in point 3 corresponds to what in [1] is defined as both-word precision and both-word recall. The idea is to reduce the penalization to a system for inferring correct translations that are missing in the golden standard dictionary because human editors might have overlooked them when elaborating the dictionary. Notice that in previous TIAD editions we only filtered out translations for which the source entry were not present in the translation (which led to computing the so-called one-word precision/recall) thus only partially covering such goal.

Baselines

We have computed results also with respect to two baselines:

Baseline 1 [Word2Vec]. The method is using Word2Vec [2] to transform the graph into a vector space. A graph edge is interpreted as a sentence and the nodes are word forms with their POS-Tag. Word2Vec iterates multiple times over the graph and learns multilingual embeddings (without additional data). We use the Gensim Word2Vec Implementation. For a given input word, we calculate a distance based on the cosine similarity of a word to every other word with the target-pos tag in the target language. The square of the distance from source to target word is interpreted as the confidence degree. For the first word the minimum distance is 0.62, for the others it is 0.82. So multiple results are only in the output if the confidence is not extremely weak. In our evaluation, we applied an arbitrary threshold of 0.5 to the confidence degree. You can find the code here. NOTE: In the TIAD'21 and TIAD'22 editions the Word2Vec baseline, although based on the same principles, has been re-implemented and re-trained to be adapted to the new Apertium RDF v2 dataset, and lead to different (generally better) results than in the previous TIAD editions.

Baseline 2 [OTIC]. The One Time Inverse

Consultation (OTIC) method was proposed by Tanaka and

Umemura [3] in 1994, and adapted by Lin et. al [4] for the

creation of multilingual lexicons. In short, the idea of the

OTIC method is to explore, for a given word, the possible

candidate translations that can be obtained through

intermediate translations in the pivot language. Then, a score

is assigned to each candidate translation based on the degree

of overlap between the pivot translations shared by both the

source and target words. In our evaluation, we have applied

the OTIC method using Spanish as pivot language, and using an

arbitrary threshold of 0.5. You can find the code here.

NOTE: Same as the Word2Vec baseline, the OTIC baseline was

re-run for TIAD'21 and TIAD'22 to be adapted to the new

Apertium RDF v2 dataset. The results are generally worse

than in TIAD'20 (with the smaller Apertium RDF v1 graph).

Evaluation results

NOTE: A direct comparison between these results with such of previous TIAD editions is only relative because of two reasons: (1) the use of a new development dataset in TIAD'21, which is the larger Apertium RDF v2 graph instead of Apertium RDF v1, which lead to a different gold standard (Apertium-KD intersection) and (2) the use of improved metrics in the evaluation, as explained above: both-word precision and both-word recall [1].

Table 1: averaged systems results. Results per language pair have been averaged for every system and ordered by F-measure in descending order. The results correspond to the optimal threshold reported by every team, or an arbitraty 0.5 threshold when no preferred threshold was indicated.

Table 1b: Averaged systems results for both TIAD'21 and TIAD'22 systems

Table 2.1: Systems results for EN-PT.

Table 2.2: Systems results for EN-FR.

Table 2.3: Systems results for FR-EN.

Table 2.4: Systems results for FR-PT.

Table 2.5: Systems results for PT-EN .

Table 2.6: Systems results for PT-FR.

Results with variable threshold (averaged across

language pairs):

Table 3.1: Results for SATLab.

Table 3.2: Results for fastRP_ES.

Table 3.3: Results for fastRP_fastText_ES_CA.

Table 3.4: Results for fastRP_fastText_ES.

Table 3.5: Results for baseline-Word2Vec.

Table 3.6: Results for baseline-OTIC.

References

[1] S: Goel, J. Gracia, and M. L. Forcada, "Bilingual

dictionary generation and enrichment via graph exploration"

[IN PRESS], Semantic Web Journal, 2022

[2] T. Mikolov, K. Chen, G. Corrado, and J. Dean, "Efficient Estimation of Word Representations in Vector Space", 2013.

[3] K. Tanaka and K. Umemura. "Construction of a bilingual dictionary intermediated by a third language". In COLING, pages 297–303, 1994.

[4] L. T. Lim, B. Ranaivo-Malançon, and E. K. Tang. "Low cost construction of a multilingual lexicon from bilingual lists". Polibits, 43:45–51, 2011.